AI Could Match a Nation’s Collective Genius

A Dire Warning from Anthropic’s CEO

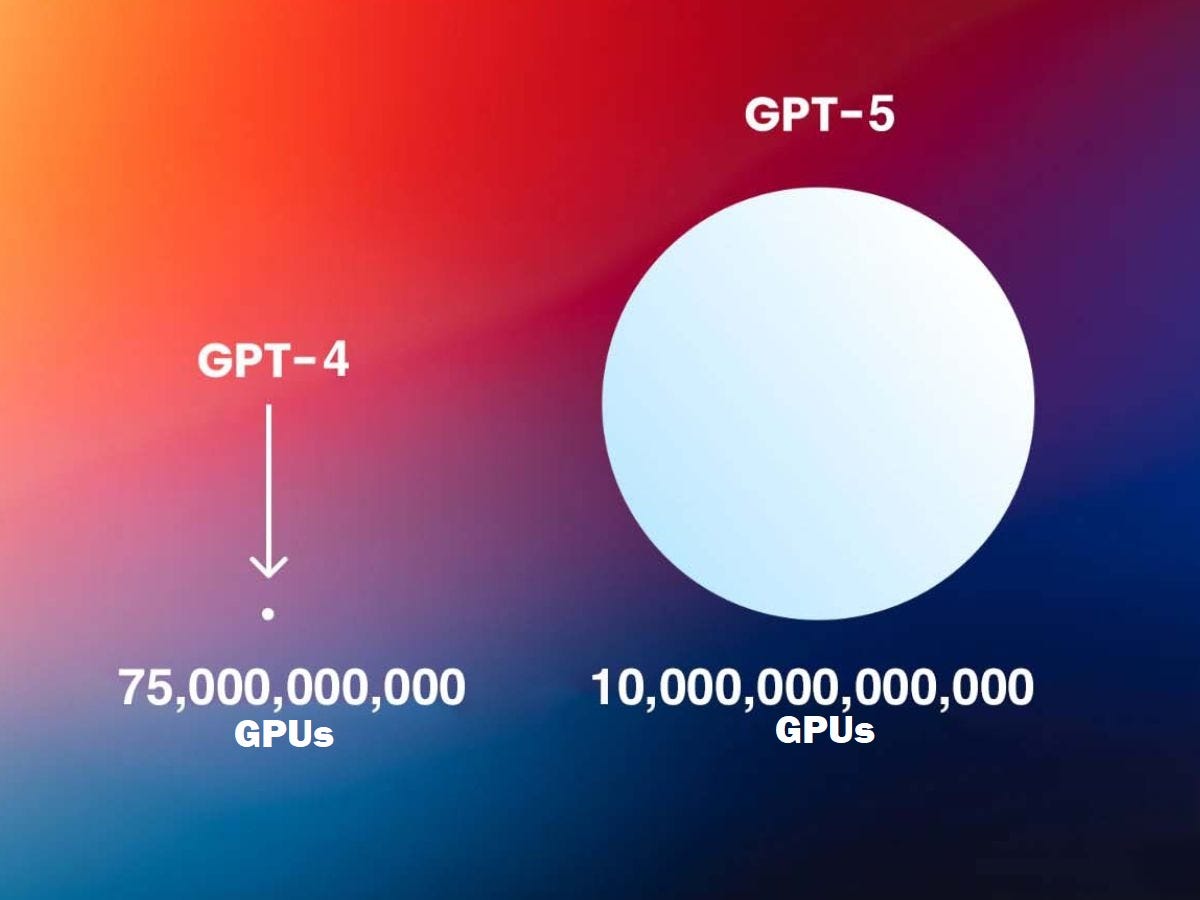

Dario Amodei, CEO of AI research company Anthropic, has issued a stark warning: by 2027, artificial intelligence may match the collective intelligence of an entire country of geniuses. He believes that the rapid development of AI poses serious risks and that the global response has been too slow.

Speaking about the recent AI Action Summit in Paris, Amodei called it a “missed opportunity.” He criticized the lack of urgency in AI regulation, especially as both democratic and authoritarian nations race for dominance in the field. He urged democratic governments to take the lead so that AI is not exploited for military and surveillance purposes.

Global Disagreements on AI Regulation

The Paris summit highlighted sharp divisions between countries on AI governance. The European Union proposed strict regulations, but U.S. Vice President J.D. Vance rejected them, arguing they would impose heavy compliance costs on small businesses. The U.S. and the U.K. refused to sign the final agreement, a sign of how difficult global AI policy coordination remains.

Amodei stressed three key concerns:

- Maintaining democratic leadership in AI development

- Addressing security risks, including cyber threats

- Managing AI’s economic impact, especially on jobs

He also pointed to vulnerabilities in semiconductor production and supply chains, warning that disruptions could have widespread consequences.

Can Regulation Keep Up with AI’s Rapid Growth?

If AI truly reaches genius-level intelligence within the next few years, existing regulatory frameworks will be insufficient. Governments and tech companies must act quickly to establish effective oversight before AI systems become too advanced to control.

Balancing AI’s potential benefits with its risks is one of the greatest challenges of our time. Amodei warns that time is running out: if policymakers don’t act soon, they may lose their ability to shape AI’s future.

What do you think? Should governments impose strict AI regulations, or would that hinder innovation?