DeepSeek AI: A Billion-Dollar Investment Behind the Innovation

The Controversy Around DeepSeek’s Cost Claims

DeepSeek, a rising Chinese AI startup, drew wide attention with its latest model, R1, and with the figure that training its underlying base model, DeepSeek-V3, cost only about $6 million in computing. The number suggested a major breakthrough in AI training efficiency and cast DeepSeek as a potential competitor to OpenAI. However, a report from the research firm SemiAnalysis concludes that DeepSeek’s actual investment is far larger than the headline figure implies.

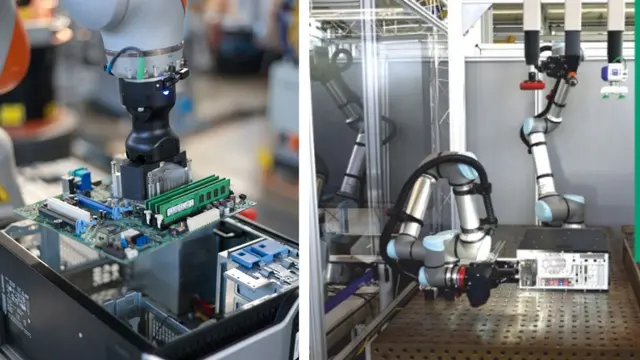

According to the report, DeepSeek has spent roughly $1.6 billion on server infrastructure and has access to about 50,000 Nvidia Hopper GPUs. The startup operates its own data centers, which lets it control its AI training process without relying on external cloud providers. These figures cast doubt on the idea that DeepSeek achieved revolutionary AI advances with minimal investment.

The Financial and Technical Power Behind DeepSeek

DeepSeek grew out of the Chinese hedge fund High-Flyer, which began investing in AI technologies years before DeepSeek existed as a separate company. In 2023 it was spun off as a standalone AI-focused company, funded entirely by internal capital rather than outside investors. This structure gives it financial flexibility that many venture-funded AI startups lack.

SemiAnalysis reports that DeepSeek has invested over $500 million in AI research, training, and development. The company recruits talent exclusively from mainland China and pays highly competitive salaries, with some offers to AI researchers reportedly exceeding $1.3 million per year. Unlike many competitors, DeepSeek emphasizes practical skills and problem-solving ability over formal academic credentials.

One of its key innovations is Multi-Head Latent Attention (MLA), which took months to develop and consumed substantial GPU resources. MLA compresses the keys and values that a model must cache during inference into a much smaller latent vector, reducing memory use. Rather than relying solely on more computing power, DeepSeek prioritizes algorithmic optimization, aiming to reduce its dependence on expensive GPUs. Some experts suggest that advances of this kind could weigh on demand for high-end Nvidia GPUs and, in turn, affect the broader AI hardware market.
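MLA first appeared in DeepSeek’s published DeepSeek-V2 work. Its core idea is to cache one small compressed latent vector per token instead of full per-head keys and values. The sketch below is a simplified illustration under stated assumptions: random weights, hypothetical toy sizes, and function names of our own. It omits the separate positional (RoPE) key path and the weight-folding optimizations used in the real model. The final line compares per-token, per-layer cache sizes using the configuration reported for DeepSeek-V2.

```python
import numpy as np

def mha_cache_per_token(n_heads: int, d_head: int) -> int:
    # Standard multi-head attention caches a full key and value vector for every head.
    return 2 * n_heads * d_head

def mla_cache_per_token(d_latent: int, d_rope: int) -> int:
    # MLA caches one compressed latent vector plus a small shared positional (RoPE) key.
    return d_latent + d_rope

# Simplified low-rank joint KV compression for a single token (illustrative only).
rng = np.random.default_rng(0)
d_model, n_heads, d_head, d_latent = 1024, 8, 64, 128  # hypothetical toy sizes
h = rng.standard_normal(d_model)                        # hidden state of one token
W_down = rng.standard_normal((d_latent, d_model)) / np.sqrt(d_model)
W_up_k = rng.standard_normal((n_heads * d_head, d_latent)) / np.sqrt(d_latent)
W_up_v = rng.standard_normal((n_heads * d_head, d_latent)) / np.sqrt(d_latent)

c_kv = W_down @ h                               # only this latent vector is cached
k = (W_up_k @ c_kv).reshape(n_heads, d_head)    # keys recovered from the latent when needed
v = (W_up_v @ c_kv).reshape(n_heads, d_head)    # values recovered from the latent when needed
print(c_kv.shape, k.shape, v.shape)             # (128,) (8, 64) (8, 64)

# Reported DeepSeek-V2 settings: 128 heads, head dim 128, latent dim 512, RoPE key dim 64.
print(mha_cache_per_token(128, 128), mla_cache_per_token(512, 64))  # 32768 576
```

Under those settings, the cached state per token shrinks from 32,768 numbers to 576 per layer. That kind of memory saving is what makes an efficiency-first strategy plausible without adding more GPUs.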

The Reality Behind the $6 Million Training Cost

While DeepSeek’s roughly $6 million figure generated significant buzz, it covers only the GPU cost of the final training run, priced at an assumed rental rate. It excludes prior research and ablation experiments, data processing, staff, and the cost of building and operating the infrastructure itself. In reality, DeepSeek has funneled hundreds of millions of dollars into AI development, a reminder that scaling artificial intelligence still requires massive financial backing.
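The roughly $6 million number comes from the DeepSeek-V3 technical report. That report priced the final training run of V3, the base model R1 was built on, in rented GPU-hours. Below is a minimal sketch of that arithmetic using the H800 GPU-hour counts and the $2 per GPU-hour rental price the report states. The variable names are our own.

```python
# H800 GPU-hours reported for DeepSeek-V3's final training run, by stage.
gpu_hours = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
# Assumed rental price in USD per GPU-hour, as stated in the report; not an actual cash outlay.
RENTAL_PRICE_PER_GPU_HOUR = 2.00

total_hours = sum(gpu_hours.values())
cost = total_hours * RENTAL_PRICE_PER_GPU_HOUR
print(f"{total_hours:,} GPU-hours -> ${cost / 1e6:.3f}M")  # 2,788,000 GPU-hours -> $5.576M
```

Nothing in this calculation accounts for buying the GPUs, earlier experimental runs, or salaries. That gap is why the headline figure and the billion-dollar infrastructure estimate can both be accurate at the same time.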

Unlike large corporations slowed by bureaucracy, DeepSeek’s lean structure lets it move quickly in AI development. However, as Elon Musk has pointed out, staying competitive in AI requires billions of dollars in annual investment, a level of spending DeepSeek appears willing to commit.

DeepSeek AI: Conclusion

DeepSeek’s success is not the result of a miraculous cost-saving breakthrough but of well-funded, strategically planned investment in AI technology. While the company has made real progress in AI efficiency, presenting a powerful model as the product of minimal spending is misleading. As the AI arms race intensifies, DeepSeek’s case shows that significant capital investment remains a key driver of technological innovation.